Exponentiation by squaring

Exponentiating by squaring is a general method for fast computation of large integer powers of a number. Some variants are commonly referred to as square-and-multiply algorithms or binary exponentiation. In additive notation the appropriate term is double-and-add. These can be of quite general use: for example in modular arithmetic or powering of matrices.

Contents |

Underlying idea

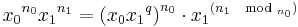

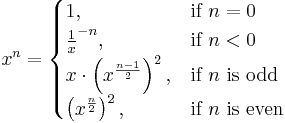

Using the following observation, one can create a recursive algorithm that computes xn for an integer n using squaring and multiplication:

A brief analysis shows that such an algorithm uses log2n squarings and at most log2n multiplications. For n > about 4 this is computationally more efficient than naïvely multiplying the base with itself repeatedly.

2k-ary method

This algorithm calculates the value of xn after expanding the exponent in base 2k. It was first proposed by Brauer in 1939. In the algorithm below we make use of the following function f(0) = (k,0) and f(m) = (s,u) where m = u·2s with u odd.

Algorithm:

- Input

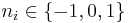

- An element x of G, a parameter k > 0, a non-negative integer n = (nl−1, nl−2, ..., n0)2k and the precomputed values x3, x5, ...,

.

.

- Output

- The element xn in G

1. y := 1 and i := l-1 2. While i>=0 do 3. (s,u) := f(ni) 4. for j:=1 to k-s do 5. y := y2 6. y := y*xu 7. for j:=1 to s do 8. y := y2 9. i := i-1 10. return y

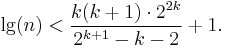

For optimal efficiency, k should be the smallest integer satisfying [1]

Sliding window method

This method is an efficient variant of the 2k-ary method. For example, to calculate the exponent 398 which has binary expansion (110 001 110)2, we take a window of length 3 using the 2k-ary method algorithm we calculate 1,x3,x6,x12,x24,x48,x49,x98,x99,x198,x199,x398. But, we can also compute 1,x3,x6,x12,x24,x48,x96,x192,x199, x398 which saves one multiplication and amounts to evaluating (110 001 110)n2

Here is the general algorithm:

Algorithm:

- Input

- An element 'x' of 'G',a non negative integer n=(ni,ni-1,...,n0)2, a parameter k>0 and the pre-computed values x3,x5,...

.

.

- Output

- The element xn in G

Algorithm:

1. y := 1 and i := l-1

2. while i > -1 do

3. if ni=0 then y:=y2 and i:=i-1

4. else

5. s:=max{i-k+1,0}

6. while ns=0 do s:=s+1

7. for h:=1 to i-s+1 do y:=y2

8. u:=(ni,ni-1,....,ns)2

9. y:=y*xu

10. i:=s-1

11. return y

Note:

- In line 6 the loop finds the longest string of length less than or equal to 'k' which ends in a non zero value. Also not all odd powers of 2 up to

need be computed and only those specifically involved in the computation need be considered.

need be computed and only those specifically involved in the computation need be considered.

Montgomery's ladder technique

Many algorithms for exponentiation do not provide defence against side-channel attacks. Namely, an attacker observing the sequence of squarings and multiplications can (partially) recover the exponent involved in the computation. This is a problem if the exponent should remain secret, as with many public-key cryptosystems. A technique called Montgomery's Ladder[2] addresses this concern.

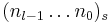

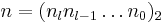

Given the binary expansion of a positive, non-zero integer n=(nk-1...n0)2 with nk-1=1 we can compute xn as follows:

x1=x; x2=x2

for i=k-2 to 0 do

If ni=0 then

x2=x1*x2; x1=x12

else

x1=x1*x2; x2=x22

return x1

The algorithm performs a fixed sequence of operations (up to log n): a multiplication and squaring takes place for each bit in the exponent, regardless of the bit's specific value.

Fixed base exponent

There are several methods which can be employed to calculate xn when the base is fixed and the exponent varies. As one can see, precomputations play a key role in these algorithms.

Yao's method

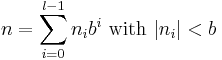

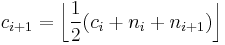

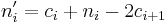

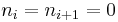

Yao's method is orthogonal to the 2k-ary method where the exponent is expanded in radix b=2k and the computation is as performed in the algorithm above. Let "n", "ni", "b", and "bi" be integers.

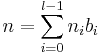

Let the exponent "n" be written as

where

where  for all

for all ![i \in [0,l-1]](/2012-wikipedia_en_all_nopic_01_2012/I/413d6f2b9b821dcd808b6e9a528ab451.png)

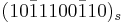

Let xi = xbi. Then the algorithm uses the equality

Given the element 'x' of G, and the exponent 'n' written in the above form, along with the pre computed values xb0....xbl-1 the element xn is calculated using the algorithm below

- y=1,u=1 and j=h-1

- while j > 0 do

- for i=0 to l-1 do

- if ni=j then u=u*xbi

- y=y*u

- j=j-1

- return y

If we set h=2k and bi = hi then the ni 's are simply the digits of n in base h. Yao's method collects in u first those xi which appear to the highest power h-1; in the next round those with power h-2 are collected in u as well etc. The variable y is multiplied h-1 times with the initial u, h-2 times with the next highest powers etc. The algorithm uses l+h-2 multiplications and l+1 elements must be stored to compute xn (see [1]).

Euclidean method

The Euclidean method was first introduced in 'Efficient exponentiation using precomputation and vector addition chains' by P.D Rooij. The algorithm below computes xn using the following equality recursively

where q=

Given the element x in G and the exponent 'n' written as in Yao's method with the pre computed values xb0....xbl-1 the element xn is calculated using the algorithm below

- while true do

- Find M such that nM ≥ ni for all i in [0,l-1]

- Find N ≠ M such that nN ≥ ni for all i in [0,l-1], i ≠ M

- if nN ≠ 0 then

- q=

(nM/nN)

(nM/nN) , xN=xMq·xN and nM=nN mod nN

, xN=xMq·xN and nM=nN mod nN - else break

- return xMnM

The algorithm first finds the largest value amongst the ni and then the supremum within the set of {ni : i ≠ M }. It raises xM to the power q, multiplies this value with xN, and then assigns xN the result of this computation and nM the value nM modulo nN.

Further applications

The same idea allows fast computation of large exponents modulo a number. Especially in cryptography, it is useful to compute powers in a ring of integers modulo q. It can also be used to compute integer powers in a group, using the rule

- Power(x, −n) = (Power(x, n))−1.

The method works in every semigroup and is often used to compute powers of matrices,

For example, the evaluation of

- 13789722341 (mod 2345)

would take a very long time and lots of storage space if the naïve method were used: compute 13789722341 then take the remainder when divided by 2345. Even using a more effective method will take a long time: square 13789, take the remainder when divided by 2345, multiply the result by 13789, and so on. This will take 722340 modular multiplications. The square-and-multiply algorithm is based on the observation that 13789722341 = 13789(137892)361170. So, if we computed 137892, then the full computation would only take 361170 modular multiplications. This is a gain of a factor of two. But since the new problem is of the same type, we can apply the same observation again, once more approximately halving the size.

The repeated application of this algorithm is equivalent to decomposing the exponent (by a base conversion to binary) into a sequence of squares and products: for example

- x13 = x1101bin

- = x(1·2^3 + 1·2^2 + 0·2^1 + 1·2^0)

- = x1·2^3 · x1·2^2 · x0·2^1 · x1·2^0

- = x2^3 · x2^2 · 1 · x2^0

- = x8 · x4 · x1

- = (x4)2 · (x2)2 · x

- = (x4 · x2)2 · x

- = ((x2)2 · x2)2 · x

- = ((x2 · x)2)2 · x → algorithm needs only 5 multiplications instead of 12 (13−1)

Some more examples:

- x10 = ((x2)2·x)2 because 10 = (1,010)2 = 23+21, algorithm needs 4 multiplications instead of 9

- x100 = (((((x2·x)2)2)2·x)2)2 because 100 = (1,100,100)2 = 26+25+22, algorithm needs 8 multiplications instead of 99

- x1,000 = ((((((((x2·x)2·x)2·x)2·x)2)2·x)2)2)2 because 103 = (1,111,101,000)2, algorithm needs 14 multiplications instead of 999

- Worked example (with modulo) for the RSA algorithm.

Example implementations

Computation by powers of 2

This is a non-recursive implementation of the above algorithm in Ruby.

In most statically typed languages, result=1 must be replaced with code assigning an identity matrix of the same size as x to result to get a matrix exponentiating algorithm. In Ruby, thanks to coercion, result is automatically upgraded to the appropriate type, so this function works with matrices as well as with integers and floats. Note that n=n1 is redundant when n=n/2 implicitly rounds towards zero, as lower level languages would do. n[0] is the rightmost bit of the binary representation of n, so if it is 1, the number is odd, if it is zero, the number is even.

def power(x,n) result = 1 while n.nonzero? if n[0].nonzero? result *= x n -= 1 end x *= x n /= 2 end return result end

Runtime example: compute 310

parameter x = 3

parameter n = 10

result := 1

Iteration 1

n = 10 -> n is even

x := x2 = 32 = 9

n := n / 2 = 5

Iteration 2

n = 5 -> n is odd

-> result := result * x = 1 * x = 1 * 32 = 9

n := n - 1 = 4

x := x2 = 92 = 34 = 81

n := n / 2 = 2

Iteration 3

n = 2 -> n is even

x := x2 = 812 = 38 = 6561

n := n / 2 = 1

Iteration 4

n = 1 -> n is odd

-> result := result * x = 32 * 38 = 310 = 9 * 6561 = 59049

n := n - 1 = 0

return result

Runtime example: compute 310

result := 3 bin := "1010" Iteration for digit 2: result := result2 = 32 = 9 1010bin - Digit equals "0" Iteration for digit 3: result := result2 = (32)2 = 34 = 81 1010bin - Digit equals "1" --> result := result*3 = (32)2*3 = 35 = 243 Iteration for digit 4: result := result2 = ((32)2*3)2 = 310 = 59049 1010bin - Digit equals "0" return result

JavaScript-Demonstration: http://home.mnet-online.de/wzwz.de/temp/ebs/en.htm

Calculation of products of powers

Exponentiation by squaring may also be used to calculate the product of 2 or more powers. If the underlying group or semigroup is commutative then it is often possible to reduce the number of multiplication by computing the product simultaneously.

Example

The formula a7×b5 may be calculated within 3 steps:

- ((a)2×a)2×a (four multiplications for calculating a7)

- ((b)2)2×b (three multiplications for calculating b5)

- (a7)×(b5) (one multiplication to calculate the product of the two)

so one gets eight multiplications in total.

A faster solution is to calculate both powers simultaneously:

- ((a×b)2×a)2×a×b

which needs only 6 multiplications in total. Note that a×b is calculated twice, the result could be stored after the first calculation which reduces the count of multiplication to 5.

Example with numbers:

- 27×35 = ((2×3)2×2)2×2×3 = (62×2)2×6 = 722×6 = 31,104

Calculating the powers simultaneously instead of calculating them separately always reduces the count of multiplications if at least two of the exponents are greater than 1.

Using transformation

The example above a7×b5 may also be calculated with only 5 multiplications if the expression is transformed before calculation:

a7×b5 = a2×(ab)5 with ab := a×b

- ab := a×b (one multiplication)

- a2×(ab)5 = ((ab)2×a)2×ab (four multiplications)

Generalization of transformation shows the following scheme:

For calculating aA×bB×...×mM×nN

1st: define ab := a×b, abc = ab×c, ...

2nd: calculate the transformed expression aA−B×abB−C×...×abc..mM−N×abc..mnN

Transformation before calculation often reduces the count of multiplications but in some cases it also increases the count (see the last one of the examples below), so it may be a good idea to check the count of multiplications before using the transformed expression for calculation.

Examples

For the following expressions the count of multiplications is shown for calculating each power separately, calculating them simultaneously without transformation and calculating them simultaneously after transformation.

Example: a7×b5×c3

separate: [((a)2×a)2×a] × [((b)2)2×b] × [(c)2×c] ( 11 multiplications )

simultaneous: ((a×b)2×a×c)2×a×b×c ( 8 multiplications )

transformation: a := 2 ab := a×b abc := ab×c ( 2 multiplications )

calculation after that: (a×ab×abc)2×abc ( 4 multiplications ⇒ 6 in total )

Example: a5×b5×c3

separate: [((a)2)2×a] × [((b)2)2×b] × [(c)2×c] ( 10 multiplications )

simultaneous: ((a×b)2×c)2×a×b×c ( 7 multiplications )

transformation: a := 2 ab := a×b abc := ab×c ( 2 multiplications )

calculation after that: (ab×abc)2×abc ( 3 multiplications ⇒ 5 in total )

Example: a7×b4×c1

separate: [((a)2×a)2×a] × [((b)2)2] × [c] ( 8 multiplications )

simultaneous: ((a×b)2×a)2×a×c ( 6 multiplications )

transformation: a := 2 ab := a×b abc := ab×c ( 2 multiplications )

calculation after that: (a×ab)2×a×ab×abc ( 5 multiplications ⇒ 7 in total )

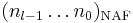

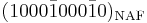

Signed-digit recoding

In certain computations it may be more efficient to allow negative coefficients and hence use the inverse of the base, provided inversion in G is ' fast' or has been precomputed. For example, when computing x2k−1 the binary method requires k−1 multiplications and k−1 squarings . However one could perform k squarings to get x2k and then multiply by x−1 to obtain x2k−1.

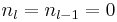

To this end we define the signed-digit representation of an integer  in radix

in radix  as

as

'Signed Binary Representation' corresponds to the particular choice  and

and  . It is denoted by

. It is denoted by  . There are several methods for computing this representation. The representation is not unique, for example take

. There are several methods for computing this representation. The representation is not unique, for example take  . Two distinct signed-binary representations are given by

. Two distinct signed-binary representations are given by  and

and  , where

, where  is used to denote

is used to denote  . Since the binary method computes a multiplication for every non-zero entry in the base 2 representation of

. Since the binary method computes a multiplication for every non-zero entry in the base 2 representation of  , we are interested in finding the signed-binary representation with the smallest number of non-zero entries, that is, the one with minimal Hamming weight. One method of doing this is to compute the representation in non-adjacent form, or NAF for short, which is one that satisfies

, we are interested in finding the signed-binary representation with the smallest number of non-zero entries, that is, the one with minimal Hamming weight. One method of doing this is to compute the representation in non-adjacent form, or NAF for short, which is one that satisfies  and denoted by

and denoted by  . For example the NAF representation of 478 is equal to

. For example the NAF representation of 478 is equal to  . This representation always has minimal Hamming weight. A simple algorithm to compute the NAF representation of a given integer

. This representation always has minimal Hamming weight. A simple algorithm to compute the NAF representation of a given integer  with

with  is the following:

is the following:

- for

to

to  do

do

- return

Another algorithm by Koyama and Tsuruoka does not require the condition that  ; it still minimizes the Hamming weight.

; it still minimizes the Hamming weight.

Alternatives and generalizations

Exponentiation by squaring can be viewed as a suboptimal addition-chain exponentiation algorithm: it computes the exponent via an addition chain consisting of repeated exponent doublings (squarings) and/or incrementing exponents by one (multiplying by x) only. More generally, if one allows any previously computed exponents to be summed (by multiplying those powers of x), one can sometimes perform the exponentiation using fewer multiplications (but typically using more memory). The smallest power where this occurs is for n=15:

![a^{15} = x \times (x \times [x \times x^2]^2)^2 \!](/2012-wikipedia_en_all_nopic_01_2012/I/9a13063bfadff42ccb6befbce89e21e4.png) (squaring, 6 multiplies)

(squaring, 6 multiplies)![a^{15} = x^3 \times ([x^3]^2)^2 \!](/2012-wikipedia_en_all_nopic_01_2012/I/503e89254db336f4a5cd11d1c956c1ce.png) (optimal addition chain, 5 multiplies if x3 is re-used)

(optimal addition chain, 5 multiplies if x3 is re-used)

In general, finding the optimal addition chain for a given exponent is a hard problem, for which no efficient algorithms are known, so optimal chains are typically only used for small exponents (e.g. in compilers where the chains for small powers have been pre-tabulated). However, there are a number of heuristic algorithms that, while not being optimal, have fewer multiplications than exponentiation by squaring at the cost of additional bookkeeping work and memory usage. Regardless, the number of multiplications never grows more slowly than Θ(log n), so these algorithms only improve asymptotically upon exponentiation by squaring by a constant factor at best.

See also

- Modular exponentiation

- Vectorial addition chain

- Montgomery reduction

- Non-adjacent form

- Addition chain

![x^n = \prod_{i=0}^{l-1} {x_i}^{n_i} = \prod_{j=1}^{h-1}{\bigg[\prod_{n_i=j} x_i\bigg]}^j](/2012-wikipedia_en_all_nopic_01_2012/I/eef13971d6f968ceb72d35d15b8cb935.png)